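This study applies data-mining techniques such as association rules and clustering to analyze the formulation and compatibility patterns of patented Chinese herbal compound prescriptions for depression.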

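Methods: Patented Chinese herbal compound prescriptions for treating depression were retrieved. Preparations for external use and prescriptions that combine Chinese and Western drugs were excluded. We were recently asked to write a research report on these medication patterns, including some graphs and statistical output.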

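In traditional Chinese medicine (TCM), patented compound prescriptions embody the knowledge and clinical experience of many TCM experts and are a valuable part of the TCM heritage. Mining the medication patterns in these prescriptions matters for preserving and developing TCM, improving clinical outcomes, and developing new drugs.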

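With the rapid development of information technology, data mining offers new perspectives and methods for TCM research.

- The Apriori association rule algorithm uncovers latent associations between herbs. It reveals which herbs are frequently used together, which gives a theoretical basis for clinical herb combination.
- K-means clustering groups patented prescriptions with similar medication patterns. This gives an overview of the medication characteristics of each type of prescription.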
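For reference, here are the standard quantities behind these two methods. The notation is introduced only for illustration:

- $N$ is the number of prescriptions.
- $X$ and $Y$ are disjoint herb sets.
- $\sigma(S)$ is the number of prescriptions that contain every herb in $S$.

$$
\operatorname{supp}(X)=\frac{\sigma(X)}{N},\qquad
\operatorname{conf}(X\Rightarrow Y)=\frac{\operatorname{supp}(X\cup Y)}{\operatorname{supp}(X)},\qquad
\operatorname{lift}(X\Rightarrow Y)=\frac{\operatorname{conf}(X\Rightarrow Y)}{\operatorname{supp}(Y)}
$$

Apriori keeps only itemsets whose support reaches a minimum threshold. It prunes candidates using the fact that every subset of a frequent itemset is itself frequent.

K-means, given $k$, partitions the objects $x_i$ into clusters $C_1,\dots,C_k$ with means $\mu_j$. Here the objects would be prescriptions or herbs encoded as 0/1 vectors. The partition minimizes the within-cluster sum of squares:

$$
\min_{C_1,\dots,C_k}\ \sum_{j=1}^{k}\sum_{x_i\in C_j}\lVert x_i-\mu_j\rVert^2
$$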

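The selected patented prescriptions were first preprocessed, for example by standardizing herb terminology. The relevant information was then extracted into tables. Association rule analysis was performed in the R data-analysis software, and cluster analysis in network analysis software.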
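Term standardization maps different names for the same herb onto one standard name before the data are tabulated. Below is a minimal base-R sketch of one way to do this. The synonym table, the pinyin herb names, and the function `normalize_herbs` are all hypothetical; they are not the authors' actual dictionary.

```r
# Hypothetical synonym table: alias -> standard herb name (illustration only)
synonyms <- c("Bei Chaihu" = "Chaihu", "Nan Chaihu" = "Chaihu",
              "Chao Baishao" = "Baishao")

# Standardize the herb names of one prescription (hypothetical helper)
normalize_herbs <- function(herbs, synonyms) {
  herbs <- trimws(herbs)                        # strip stray whitespace
  hit <- herbs %in% names(synonyms)             # entries that are known aliases
  herbs[hit] <- synonyms[herbs[hit]]            # replace aliases by standard names
  unique(herbs[!is.na(herbs) & herbs != ""])    # drop empty cells and duplicates
}

rx <- c(" Bei Chaihu", "Chaihu", "Chao Baishao", "Danggui", "")
normalize_herbs(rx, synonyms)
```

Removing duplicates after mapping matters: once two aliases collapse to one name, that herb should count only once per prescription.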

Viewing the data

Converting the data into a binary (0/1) matrix

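```r
# data: one prescription per row, one herb name per cell (character; NA or "" if empty)
colnames(data) <- paste0("X", 1:ncol(data))
# all distinct herb names across the prescriptions
total_types <- sort(setdiff(unlist(data), c(NA, "")))
database <- NULL
for (i in seq_len(nrow(data))) {
  tmp <- integer(length(total_types))
  # 1 if herb j occurs in prescription i, otherwise 0
  tmp[total_types %in% unlist(data[i, ])] <- 1L
  database <- rbind(database, tmp)
}
colnames(database) <- total_types
```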
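The loop above grows the matrix one row at a time. As an alternative, the same 0/1 matrix can be built in one vectorized pass with base R's `table()`. This is a minimal sketch that assumes each row of the input is one prescription with one herb name per cell. The function name `to_binary` and the toy data are hypothetical.

```r
# Build a prescription-by-herb 0/1 matrix without an explicit loop (hypothetical helper)
to_binary <- function(data) {
  herbs <- as.matrix(data)                                  # character matrix of herb names
  rx_id <- rep(seq_len(nrow(herbs)), times = ncol(herbs))   # prescription index of each cell
  herb  <- as.vector(herbs)                                 # column-major, aligned with rx_id
  keep  <- !is.na(herb) & herb != ""                        # ignore empty cells
  tab <- table(factor(rx_id[keep], levels = seq_len(nrow(herbs))), herb[keep])
  m <- unclass(tab) > 0                                     # presence, even if a herb repeats
  storage.mode(m) <- "integer"
  m
}

# Hypothetical toy data: 3 prescriptions
toy <- data.frame(X1 = c("Chaihu", "Chaihu", "Danggui"),
                  X2 = c("Baishao", "Danggui", NA),
                  X3 = c("Danggui", "", NA))
to_binary(toy)
```

`table()` counts every (prescription, herb) combination at once. Comparing the counts with `> 0` turns them into presence indicators, so the result does not depend on how often a herb is listed within one prescription.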

Building the Apriori model
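The apriori() call itself is not shown here. To illustrate what Apriori does, here is a minimal base-R sketch of its level-wise frequent-itemset search: join, prune, and count. It is not the arules implementation, and the toy matrix and support threshold are hypothetical.

```r
# Minimal base-R sketch of Apriori's level-wise frequent-itemset search.
# Not the arules implementation; data and threshold are hypothetical.
apriori_itemsets <- function(m, min_supp) {
  m <- m > 0                                   # logical prescription-by-herb matrix
  key <- function(s) paste(s, collapse = "|")  # label for a sorted itemset
  supp_of <- function(s) mean(rowSums(m[, s, drop = FALSE]) == length(s))
  # level 1: frequent single herbs
  current <- as.list(colnames(m)[colMeans(m) >= min_supp])
  result <- list()
  while (length(current) > 0) {
    result <- c(result, current)
    k <- length(current[[1]]) + 1
    # join step: merge pairs of frequent (k-1)-itemsets into k-itemsets
    cands <- list()
    for (a in seq_along(current)) for (b in seq_along(current)) {
      if (a < b) {
        u <- sort(union(current[[a]], current[[b]]))
        if (length(u) == k) cands[[length(cands) + 1]] <- u
      }
    }
    cands <- unique(cands)
    # prune step: every (k-1)-subset of a candidate must itself be frequent
    freq_keys <- vapply(current, key, character(1))
    cands <- Filter(function(s) {
      all(vapply(seq_along(s), function(i) key(s[-i]) %in% freq_keys, logical(1)))
    }, cands)
    # count step: keep candidates that reach the minimum support
    current <- Filter(function(s) supp_of(s) >= min_supp, cands)
  }
  data.frame(itemset = vapply(result, key, character(1)),
             support = vapply(result, supp_of, numeric(1)))
}

# Hypothetical toy matrix: 4 prescriptions x 4 herbs
m <- rbind(c(1, 1, 1, 0),
           c(1, 1, 0, 0),
           c(1, 0, 1, 0),
           c(1, 1, 1, 1))
colnames(m) <- c("Chaihu", "Baishao", "Danggui", "Gancao")
res <- apriori_itemsets(m, min_supp = 0.5)
res
```

arules::apriori() is much more efficient and also derives rules from the frequent itemsets. This sketch only makes the join, prune, and count steps visible.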

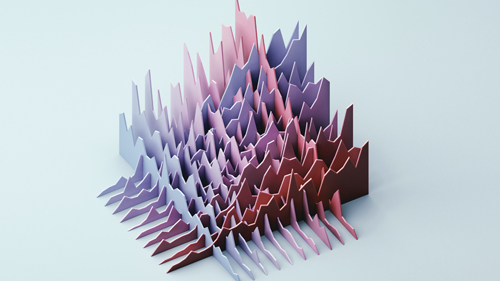

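```r
library(arulesViz)  # graph-based visualization of association rules
# all_rules: the rule set mined with apriori() (the call is not shown)
plot(all_rules, method = "graph")
```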

Association rule analysis of herb pairs in the patented compound prescriptions

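A herb pair is the basic unit of formula compatibility. It reflects compatibility relationships such as mutual reinforcement, mutual counteraction, and the grouping of herbs of the same kind.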

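The herbs in a pair tend to appear together in the same prescription. Herb pairs can therefore be identified from rules that have high confidence in both directions, that is, both A ⇒ B and B ⇒ A.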
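The bidirectional criterion can be sketched in base R using the co-occurrence counts from the 0/1 matrix. A pair {a, b} is kept only when its support and both confidences, a ⇒ b and b ⇒ a, clear the thresholds. The function name `find_herb_pairs`, the thresholds, and the toy data are hypothetical; the article does not state the values it used.

```r
# Sketch: herb pairs whose 2-item rules are strong in BOTH directions.
# Function name, thresholds and toy data are hypothetical.
find_herb_pairs <- function(m, min_supp, min_conf) {
  m <- (m > 0) * 1
  n <- nrow(m)
  co <- crossprod(m)        # co[a, b]: prescriptions containing both herbs a and b
  cnt <- unname(diag(co))   # cnt[a]: prescriptions containing herb a
  herbs <- colnames(m)
  out <- NULL
  for (a in seq_along(herbs)) for (b in seq_along(herbs)) {
    if (a >= b || co[a, b] == 0) next
    supp    <- co[a, b] / n
    conf_ab <- co[a, b] / cnt[a]   # confidence of herb a => herb b
    conf_ba <- co[a, b] / cnt[b]   # confidence of herb b => herb a
    if (supp >= min_supp && conf_ab >= min_conf && conf_ba >= min_conf) {
      out <- rbind(out, data.frame(herb_a = herbs[a], herb_b = herbs[b],
                                   support = supp, conf_ab = conf_ab,
                                   conf_ba = conf_ba))
    }
  }
  out
}

# Hypothetical toy matrix: 4 prescriptions x 4 herbs
m <- rbind(c(1, 1, 1, 0),
           c(1, 1, 0, 0),
           c(1, 0, 1, 0),
           c(1, 1, 1, 1))
colnames(m) <- c("Chaihu", "Baishao", "Danggui", "Gancao")
pairs <- find_herb_pairs(m, min_supp = 0.5, min_conf = 0.7)
pairs
```

`crossprod(m)` gives all pairwise co-occurrence counts in one matrix product, and its diagonal holds the single-herb counts. Both confidences of every pair can therefore be read off the same table.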

Screening strong association rules by confidence and support
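Screening by support and confidence can be shown on single-herb rules (one herb ⇒ one herb). The sketch below computes support, confidence, and lift for every ordered pair. It then keeps the rules above both thresholds and sorts them by lift. The helper `pair_rules`, the thresholds, and the toy data are hypothetical.

```r
# Sketch: all one-herb => one-herb rules with support, confidence and lift.
# Helper name, thresholds and toy data are hypothetical.
pair_rules <- function(m) {
  m <- (m > 0) * 1
  n <- nrow(m)
  co <- crossprod(m)                                # pairwise co-occurrence counts
  cnt <- unname(diag(co))                           # single-herb counts
  idx <- which(row(co) != col(co), arr.ind = TRUE)  # ordered pairs with lhs != rhs
  lhs <- idx[, 1]
  rhs <- idx[, 2]
  both <- co[idx]
  conf <- both / cnt[lhs]
  data.frame(lhs = colnames(m)[lhs], rhs = colnames(m)[rhs],
             support = both / n, confidence = conf,
             lift = conf / (cnt[rhs] / n))
}

# Hypothetical toy matrix: 4 prescriptions x 4 herbs
m <- rbind(c(1, 1, 1, 0),
           c(1, 1, 0, 0),
           c(1, 0, 1, 0),
           c(1, 1, 1, 1))
colnames(m) <- c("Chaihu", "Baishao", "Danggui", "Gancao")
rules <- pair_rules(m)
# keep strong rules, then rank them by lift
strong <- subset(rules, support >= 0.5 & confidence >= 0.8)
strong <- strong[order(strong$lift, decreasing = TRUE), ]
strong
```

With arules, a rule set can be filtered the same way through its `subset()` and `sort(..., by = "lift")` methods.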

K-means network cluster analysis

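The herbs in the patented compound prescriptions for depression form a complex network of compatibility relationships. Association rule analysis can reveal herb pairs and strong rules within it. However, as the support and confidence thresholds are lowered, the number of rules grows rapidly and the patterns become hard to interpret. Network clustering is an effective way to uncover the compatibility patterns in this situation.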
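The article does not state exactly what input its K-means step used. As a hedged sketch, herbs can be clustered by their 0/1 occurrence profiles across prescriptions, i.e. the columns of the binary matrix, using base R's `kmeans()`. The toy matrix, `k = 2`, and the seed are hypothetical.

```r
set.seed(123)  # kmeans() picks random initial centers

# Hypothetical toy 0/1 matrix: 6 prescriptions (rows) x 4 herbs (columns)
m <- rbind(c(1, 1, 0, 0),
           c(1, 1, 0, 0),
           c(1, 0, 0, 0),
           c(0, 0, 1, 1),
           c(0, 0, 1, 1),
           c(0, 0, 0, 1))
colnames(m) <- c("Chaihu", "Baishao", "Suanzaoren", "Yejiaoteng")

# Cluster herbs (rows of t(m)) by their occurrence profiles;
# nstart = 25 keeps the best of 25 random starts
kmod <- kmeans(t(m), centers = 2, nstart = 25)
kmod$cluster
```

Herbs that tend to appear in the same prescriptions have similar profiles and so fall into the same cluster. The cluster numbers themselves are arbitrary labels.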

```r
# cluster number assigned to each object by the fitted K-means model kmod
kmod$cluster
```
