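Evolution was a darknet market (an online black market) that operated from January 2014 until last Wednesday, when it suddenly disappeared. A few days later, a reddit post published daily wget crawls of the site going back to its launch. I ran some analyses on the dataset: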

Products can be grouped by the vendors who sell them

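On Evolution, a few top-level categories ("Drugs", "Digital Goods", "Fraud Related", etc.) are subdivided into product-specific pages. Each page contains several listings from different vendors.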

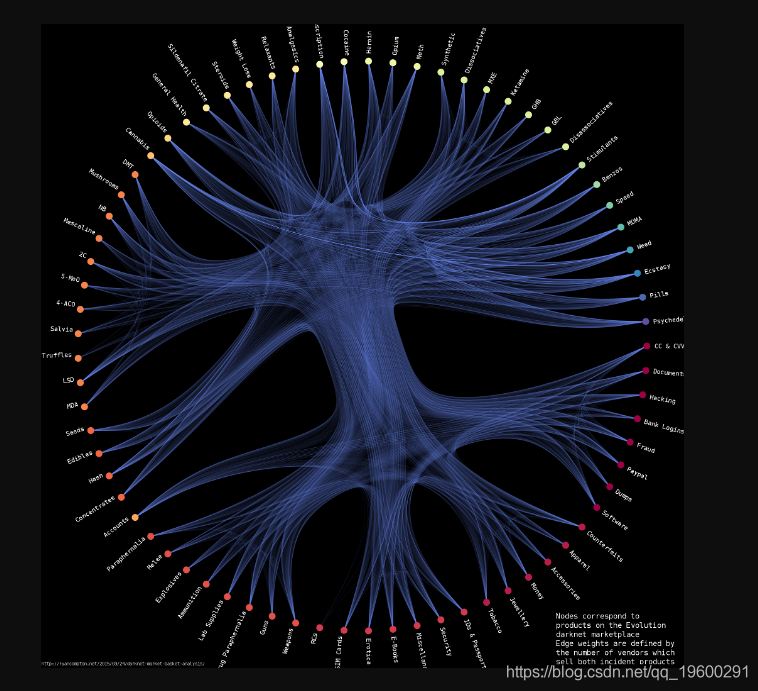

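I built a graph of products based on vendor co-occurrence: each node is a product, and the weight of an edge is the number of vendors who sell both of the products it connects. For example, if 3 vendors sell both mescaline and 4-AcO-DMT, the edge between the mescaline and 4-AcO-DMT nodes has weight 3. I used graph-tool's implementation of hierarchical edge bundling based on the stochastic block model to generate a visualization of the Evolution product network.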
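A minimal sketch of the edge-weighting rule in plain Python, without graph-tool. The helper `co_occurrence_weights` and the vendor names and product lists are hypothetical toy data, chosen only to reproduce the mescaline / 4-AcO-DMT example; this is not the notebook's own code.

```python
from collections import Counter
from itertools import combinations


def co_occurrence_weights(vendor_products, min_shared_vendors=1):
    """Return {(product_a, product_b): number of vendors selling both}.

    vendor_products maps a vendor name to the products it lists.
    Pairs are stored sorted, so (a, b) and (b, a) are the same undirected edge.
    """
    weights = Counter()
    for products in vendor_products.values():
        # de-duplicate and sort so each unordered pair counts once per vendor
        for pair in combinations(sorted(set(products)), 2):
            weights[pair] += 1
    # keep only pairs shared by at least min_shared_vendors vendors
    return {pair: w for pair, w in weights.items() if w >= min_shared_vendors}


# Hypothetical toy data: three vendors sell both Mescaline and 4-AcO-DMT
toy = {
    "vendor_a": ["Mescaline", "4-AcO-DMT", "LSD"],
    "vendor_b": ["Mescaline", "4-AcO-DMT"],
    "vendor_c": ["4-AcO-DMT", "Mescaline", "Cannabis"],
    "vendor_d": ["Mescaline", "Cannabis"],
}
weights = co_occurrence_weights(toy)
print(weights[("4-AcO-DMT", "Mescaline")])  # 3
```

Raising `min_shared_vendors` above 1 plays the same role as `MIN_SHARED_VENDORS` in the notebook code: it drops pairs that only a handful of vendors have in common.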

The notebook code that builds the graph and renders it:

```python
import collections
import itertools
import math

import matplotlib.cm
import pandas as pd
import graph_tool as gt
import graph_tool.draw
# Block-model API of the graph-tool release this notebook was written against;
# newer releases expose these functions under graph_tool.inference instead.
import graph_tool.community

# One row per (vendor, category) listing scraped from the category pages
df = pd.read_csv('/home/aahu/Downloads/evolution/evolution/products_vendors.tsv', sep='\t')

# Discard the top-level meta-categories; keep only product-specific pages
meta_cats = ['Other', 'Drugs', 'Guides & Tutorials', 'Fraud Related',
             'Services', 'Digital Goods', 'Electronics', 'Custom Listings']
df = df[~df['category'].isin(meta_cats)]

# Map each category name to an integer graph-tool vertex id, and back
node_lbs = {}
rev_node_lbs = {}
for idx, cat in enumerate(df['category'].drop_duplicates()):
    node_lbs[cat] = idx
    rev_node_lbs[idx] = cat
df['id'] = df['category'].map(node_lbs)

# One candidate edge for every pair of categories listed by the same vendor
edge_list = []
for name, group in df.groupby('vendor'):
    for e in itertools.combinations(group['id'].drop_duplicates(), 2):
        edge_list.append(tuple(sorted(e)))

# Edge weight = number of vendors selling both categories; filter rare pairs
MIN_SHARED_VENDORS = 1
c = collections.Counter(edge_list)
edge_list = [e for e in c if c[e] >= MIN_SHARED_VENDORS]

# Build the undirected graph and attach the category names as vertex labels
g = gt.Graph(directed=False)
g.add_edge_list(edge_list)
g.vertex_properties['label'] = g.new_vertex_property('string')
for v in g.vertices():
    g.vertex_properties['label'][v] = rev_node_lbs[int(v)]
print('g vert/edges: ', g.num_vertices(), g.num_edges())
# g vert/edges:  73 2219

# Edge weight and RGBA colour: heavier edges get a higher alpha, i.e. are brighter
g.edge_properties['weight'] = g.new_edge_property('double')
g.edge_properties['color'] = g.new_edge_property('vector<double>')
max_w = max(c.values())
for e in g.edges():
    # Convert the Vertex objects to plain ints so the key matches the Counter's keys
    w = c[tuple(sorted([int(e.source()), int(e.target())]))]
    g.edge_properties['weight'][e] = w
    alpha = float(w) / max_w + .025
    g.edge_properties['color'][e] = [103/255.0, 134/255.0, 239/255.0, alpha]

# Fit a nested (hierarchical) stochastic block model and lay the hierarchy out radially
state = gt.community.minimize_nested_blockmodel_dl(g, deg_corr=False,
                                                   eweight=g.ep['weight'])
bstack = state.get_bstack()
t = gt.community.get_hierarchy_tree(bstack)[0]
tpos = gt.draw.radial_tree_layout(t, t.vertex(t.num_vertices() - 1), weighted=True)
# Control points for hierarchical edge bundling
cts = gt.draw.get_hierarchy_control_points(g, t, tpos, beta=.87)
pos = g.own_property(tpos)
b = bstack[0].vp["b"]  # lowest-level block membership, used for vertex colours

# Rotate each label to point radially outward. atan2 gives the vertex's polar angle,
# which equals atan(y/x) for x > 0 and pi + atan(y/x) for x < 0 up to a multiple
# of 2*pi, without dividing by zero when x == 0.
text_rot = g.new_vertex_property('double')
g.vertex_properties['text_rot'] = text_rot
for v in g.vertices():
    text_rot[v] = math.atan2(pos[v][1], pos[v][0])

print('saving to disk...')
gt.draw.graph_draw(g, pos=pos, vertex_fill_color=b,
                   edge_control_points=cts,
                   vertex_size=20,
                   vertex_text=g.vertex_properties['label'],
                   vertex_text_rotation=g.vertex_properties['text_rot'],
                   vertex_text_position=1,
                   vertex_font_size=20,
                   vertex_font_family='mono',
                   vertex_anchor=0,
                   vertex_color=b,
                   vcmap=matplotlib.cm.Spectral,
                   ecmap=matplotlib.cm.Spectral,
                   edge_color=g.edge_properties['color'],
                   bg_color=[0, 0, 0, 1],
                   output_size=[1024*2, 1024*2],
                   output='/home/aahu/Desktop/evo_nvends={0}.png'.format(MIN_SHARED_VENDORS))
# saving to disk...
```

The graph contains 73 nodes and 2,219 edges (I found 3,785 vendors in the data).

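The category pages in the crawl are parsed into one TSV of (vendor, product, category, date) rows per crawl date by the following script:

```python
# coding: utf-8
import logging
import os
import re

# Import the submodule explicitly: `import dateutil` alone is not guaranteed
# to make dateutil.parser available
import dateutil.parser
import pandas as pd
from bs4 import BeautifulSoup

FORMAT = '%(asctime)-15s %(levelname)-6s %(message)s'
DATE_FORMAT = '%b %d %H:%M:%S'
formatter = logging.Formatter(fmt=FORMAT, datefmt=DATE_FORMAT)
handler = logging.StreamHandler()
handler.setFormatter(formatter)
logger = logging.getLogger(__name__)
logger.addHandler(handler)
logger.setLevel(logging.INFO)

DATA_DIR = '/home/aahu/Downloads/evolution/evolution/'


def html_to_df(fname, fdate):
    """
    Parse an Evolution category HTML file into (vendor, product, category, date) rows.
    The file date must be supplied (it doesn't appear in the HTML).
    """
    logger.info('processing: {}'.format(fname))
    # Open in binary mode, name the parser explicitly, and close the file afterwards
    with open(fname, 'rb') as fh:
        soup = BeautifulSoup(fh, 'html.parser')
    # Each vendor name on the page links to that vendor's profile
    profs = soup.find_all(href=re.compile('http://k5zq47j6wd3wdvjq.onion/profile/.*'))
    l = []
    for p in profs:
        if p.text != 'simurgh':  # skip the logged-in account's own link ("Welcome back simurgh!")
            l.append({
                'vendor': p.text.strip(),
                # the listing title is the second element preceding the vendor link
                'product': p.find_all_previous()[1].text.strip(),
                # the category name is the second '::'-separated part of the page title
                'category': soup.title.text.strip().split('::')[1].strip(),
                'date': fdate,
            })
    return pd.DataFrame(l)


def catdir_to_df(catdir, fdate):
    """Parse every HTML file in one crawl date's category/ directory."""
    fs = [os.path.join(catdir, x) for x in os.listdir(catdir)]
    # ignore_index gives the combined frame a clean 0..n-1 index
    return pd.concat([html_to_df(f, fdate) for f in fs], ignore_index=True)


def main():
    for datestr in os.listdir(DATA_DIR):
        catdir = os.path.join(DATA_DIR, datestr, 'category')
        # Only crawl-date directories have a category/ subfolder; checking first also
        # avoids trying to parse the output .tsv file names in DATA_DIR as dates
        if os.path.isdir(catdir):
            logger.info(catdir)
            fdate = dateutil.parser.parse(datestr)
            df = catdir_to_df(catdir, fdate)
            outname = 'category_df_' + datestr + '.tsv'
            df.to_csv(os.path.join(DATA_DIR, outname), sep='\t', index=False)


if __name__ == '__main__':
    main()
```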

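In the visualization, edges with higher weight are drawn brighter. Nodes are clustered with the stochastic block model, and nodes in the same cluster get the same color. There is a clear divide between the upper half of the graph (drugs) and the lower half (non-drugs, i.e. weapons, hacking, credit cards, etc.). This suggests that vendors who sell drugs are less likely to also sell non-drugs, and vice versa.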
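One rough way to put a number on this split is to label each category as drug or non-drug and count the vendors whose listings fall on one side or on both. The sketch below does this for the nine sample vendors listed in the vendor/product table in the next section. The `DRUG_CATEGORIES` labelling (for instance, counting "Seeds" and "Weight Loss" as drug categories) is an assumption made for this sketch, `drug_split` is a hypothetical helper, and nine vendors are far too few to confirm the pattern on their own.

```python
from collections import Counter

# Assumed drug/non-drug labelling of the sample categories (illustrative only)
DRUG_CATEGORIES = {
    "Cocaine", "Cannabis", "Stimulants", "Hash", "Benzos", "Prescription",
    "Sildenafil Citrate", "Seeds", "MDMA", "Weed", "Mescaline", "Meth",
    "Psychedelics", "Speed", "Ecstasy", "Pills", "Weight Loss",
}

# The nine sample rows from the vendor/product table
SAMPLE = {
    "MrHolland": ["Cocaine", "Cannabis", "Stimulants", "Hash"],
    "Packstation24": ["Accounts", "Benzos", "IDs & Passports", "SIM Cards", "Fraud"],
    "Spinifex": ["Benzos", "Cannabis", "Cocaine", "Stimulants", "Prescription",
                 "Sildenafil Citrate"],
    "OzVendor": ["Software", "Erotica", "Dumps", "E-Books", "Fraud"],
    "OzzyDealsDirect": ["Cannabis", "Seeds", "MDMA", "Weed"],
    "TatyThai": ["Accounts", "Documents & Data", "IDs & Passports", "Paypal", "CC & CVV"],
    "PEA_King": ["Mescaline", "Stimulants", "Meth", "Psychedelics"],
    "PROAMFETAMINE": ["MDMA", "Speed", "Stimulants", "Ecstasy", "Pills"],
    "ParrotFish": ["Weight Loss", "Stimulants", "Prescription", "Ecstasy"],
}


def drug_split(baskets, drug_categories):
    """Count vendors selling only drugs, only non-drugs, or both ("mixed").

    A vendor with an empty product list falls into "non-drugs only".
    """
    counts = Counter()
    for products in baskets.values():
        has_drug = any(p in drug_categories for p in products)
        has_other = any(p not in drug_categories for p in products)
        if has_drug and has_other:
            counts["mixed"] += 1
        elif has_drug:
            counts["drugs only"] += 1
        else:
            counts["non-drugs only"] += 1
    return counts


print(drug_split(SAMPLE, DRUG_CATEGORIES))
```

Under this labelling only Packstation24 lists both kinds of product; running the same count over all 3,785 vendors would be the fairer test of the visual impression.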

91.7% of vendors who sell Speed and MDMA also sell Ecstasy

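Association rule learning is a simple and popular approach to market basket analysis. The classic application is recommending items to a shopper based on what other customers put in their carts; for whatever reason, the textbook example is "customers who buy diapers also buy beer."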

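We have no customer data, since the crawl only covers Evolution's public listings. We do, however, have data on which products each vendor sells, which lets us quantify the pattern suggested by the visual analysis above.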
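For reference, support and confidence can be written as follows, treating each vendor's set of listed categories as one basket. The notation ($P_v$ for the categories listed by vendor $v$, and disjoint category sets $X$ and $Y$) is introduced here for illustration. Support is taken as an absolute vendor count, an assumption consistent with the rule table further down, where $\text{Guns} \Rightarrow \text{Weapons}$ and $\text{Weapons} \Rightarrow \text{Guns}$ share a support of 72.

$$
\operatorname{supp}(X) = \left|\{\, v : X \subseteq P_v \,\}\right|,
\qquad
\operatorname{conf}(X \Rightarrow Y) = \frac{\operatorname{supp}(X \cup Y)}{\operatorname{supp}(X)}
$$

The support reported for a rule $X \Rightarrow Y$ is then $\operatorname{supp}(X \cup Y)$, the number of vendors who list every category in the rule, and its confidence is the fraction of vendors listing $X$ who also list $Y$.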

Here is a sample of our database (the full file has 3,785 rows, one per vendor):

| Vendor | Products |
|---|---|
| MrHolland | ['Cocaine', 'Cannabis', 'Stimulants', 'Hash'] |
| Packstation24 | ['Accounts', 'Benzos', 'IDs & Passports', 'SIM Cards', 'Fraud'] |
| Spinifex | ['Benzos', 'Cannabis', 'Cocaine', 'Stimulants', 'Prescription', 'Sildenafil Citrate'] |
| OzVendor | ['Software', 'Erotica', 'Dumps', 'E-Books', 'Fraud'] |
| OzzyDealsDirect | ['Cannabis', 'Seeds', 'MDMA', 'Weed'] |
| TatyThai | ['Accounts', 'Documents & Data', 'IDs & Passports', 'Paypal', 'CC & CVV'] |
| PEA_King | ['Mescaline', 'Stimulants', 'Meth', 'Psychedelics'] |
| PROAMFETAMINE | ['MDMA', 'Speed', 'Stimulants', 'Ecstasy', 'Pills'] |
| ParrotFish | ['Weight Loss', 'Stimulants', 'Prescription', 'Ecstasy'] |
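This per-vendor view is a reshaping of the long-format (vendor, category) rows the scraper produces. A minimal standard-library sketch of that step, assuming the rows are available as (vendor, category) pairs; `rows_to_baskets` is a hypothetical helper and the rows below are made up, with one duplicate to show that a listing seen on several crawl dates collapses into a single entry.

```python
from collections import defaultdict


def rows_to_baskets(rows):
    """Group (vendor, category) rows into {vendor: sorted list of distinct categories}."""
    baskets = defaultdict(set)
    for vendor, category in rows:
        baskets[vendor].add(category)  # a set drops repeated listings
    return {vendor: sorted(cats) for vendor, cats in baskets.items()}


# Hypothetical rows, e.g. the same listing seen on two crawl dates
rows = [
    ("PEA_King", "Mescaline"),
    ("PEA_King", "Meth"),
    ("PEA_King", "Mescaline"),
    ("ParrotFish", "Ecstasy"),
]
print(rows_to_baskets(rows))
# {'PEA_King': ['Mescaline', 'Meth'], 'ParrotFish': ['Ecstasy']}
```

With pandas, roughly the same result comes from `df.groupby('vendor')['category'].apply(lambda s: sorted(set(s)))`, which returns a Series indexed by vendor instead of a dict.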

Association rule mining is a huge field in computer science: hundreds of papers have been published on it over the past two decades.

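I ran the FP-Growth algorithm with a minimum support of 40 vendors and a minimum confidence of 0.1. It learned 12,364 rules, a selection of which appears in the table below.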
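The post doesn't say which FP-Growth implementation was used. As a sketch of the technique only, here is a compact from-scratch FP-Growth in plain Python, followed by rule generation from the frequent itemsets. The helper names (`build_fp_tree`, `fp_growth`, `mine_rules`) and the baskets are hypothetical. `min_count` is an absolute count, like the minimum support of 40, and a rule's reported support is the count of its full itemset, consistent with the Guns/Weapons rows of the table.

```python
from collections import defaultdict
from itertools import combinations


class FPNode:
    """FP-tree node: an item, its count along this path, a parent and children."""

    def __init__(self, item, parent):
        self.item = item
        self.count = 0
        self.parent = parent
        self.children = {}


def build_fp_tree(transactions, min_count):
    """Build an FP-tree from (items, count) pairs; return (item counts, header table)."""
    freq = defaultdict(int)
    for items, count in transactions:
        for item in set(items):
            freq[item] += count
    freq = {item: n for item, n in freq.items() if n >= min_count}
    root = FPNode(None, None)
    header = defaultdict(list)  # item -> every tree node holding that item
    for items, count in transactions:
        # keep frequent items only, most frequent first (ties broken by name)
        ordered = sorted((i for i in set(items) if i in freq), key=lambda i: (-freq[i], i))
        node = root
        for item in ordered:
            child = node.children.get(item)
            if child is None:
                child = FPNode(item, node)
                node.children[item] = child
                header[item].append(child)
            child.count += count
            node = child
    return freq, header


def fp_growth(transactions, min_count, suffix=frozenset()):
    """Yield (itemset, support count) for every itemset with count >= min_count."""
    freq, header = build_fp_tree(transactions, min_count)
    for item, count in freq.items():
        itemset = suffix | {item}
        yield itemset, count
        # conditional pattern base: the prefix path above each node holding `item`
        conditional = []
        for node in header[item]:
            path, parent = [], node.parent
            while parent.item is not None:  # stop at the root
                path.append(parent.item)
                parent = parent.parent
            if path:
                conditional.append((path, node.count))
        yield from fp_growth(conditional, min_count, itemset)


def mine_rules(baskets, min_count, min_confidence):
    """Return (antecedent, consequent, support, confidence) for every qualifying rule."""
    support = dict(fp_growth([(b, 1) for b in baskets], min_count))
    rules = []
    for itemset, count in support.items():
        for size in range(1, len(itemset)):
            for antecedent in combinations(sorted(itemset), size):
                antecedent = frozenset(antecedent)
                # every subset of a frequent itemset is frequent, so it is in `support`
                confidence = count / support[antecedent]
                if confidence >= min_confidence:
                    rules.append((sorted(antecedent), sorted(itemset - antecedent),
                                  count, confidence))
    return rules


# Hypothetical toy baskets, one per vendor
baskets = [
    ["Speed", "MDMA", "Ecstasy"],
    ["Speed", "MDMA", "Ecstasy"],
    ["Speed", "MDMA"],
    ["MDMA", "Ecstasy"],
    ["Guns", "Weapons"],
]
for rule in mine_rules(baskets, min_count=2, min_confidence=0.5):
    print(rule)
```

FP-Growth avoids Apriori's repeated candidate generation by compressing the baskets into a prefix tree and recursing on conditional trees; the rule step afterwards is the same for both algorithms.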

| Antecedent | Consequent | Support | Confidence |
|---|---|---|---|
| ['Speed', 'MDMA'] | ['Ecstasy'] | 155 | 0.91716 |
| ['Ecstasy', 'Stimulants'] | ['MDMA'] | 310 | 0.768 |
| ['Speed', 'Weed', 'Stimulants'] | ['Cannabis', 'Ecstasy'] | 68 | 0.623 |
| ['Fraud', 'Hacking'] | ['Accounts'] | 53 | 0.623 |
| ['Fraud', 'CC & CVV', 'Accounts'] | ['Paypal'] | 43 | 0.492 |
| ['Documents & Data'] | ['Accounts'] | 139 | 0.492 |
| ['Guns'] | ['Weapons'] | 72 | 0.98 |
| ['Weapons'] | ['Guns'] | 72 | 0.40 |
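Because confidence is the rule's support divided by the antecedent's support, the table also implies roughly how many vendors list each antecedent: divide support by confidence. A quick check on three rows (`implied_antecedent_count` is a hypothetical helper, and since the confidences are rounded the results are approximate):

```python
def implied_antecedent_count(support, confidence):
    """Approximate number of vendors listing the antecedent: supp(X u Y) / conf(X => Y)."""
    return round(support / confidence)


# (antecedent, support, confidence) copied from the rule table
table_rows = [
    ("Speed + MDMA", 155, 0.91716),
    ("Guns", 72, 0.98),
    ("Weapons", 72, 0.40),
]
for name, supp, conf in table_rows:
    print(name, implied_antecedent_count(supp, conf))
# Speed + MDMA 169
# Guns 73
# Weapons 180
```

So the 91.7% headline means 155 of roughly 169 vendors who list both Speed and MDMA also list Ecstasy, and the Guns/Weapons asymmetry comes from about 180 vendors listing Weapons but only about 73 listing Guns.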

Thanks for reading! If you have any questions, please leave a comment below.


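About the author

Kaizong Ye is a researcher at Tuoduan Research Lab (拓端研究室, TRL).

This article draws on material the author recently prepared for the course "Essentials of Data Analysis and Mining with R" (R语言数据分析挖掘必知必会).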
